Design validation

Revision as of 01:25, 20 March 2022

In 1957, the Ford company learned that making a perfectly functional car is not enough to achieve commercial success: nobody wanted to buy it, and the company lost 250 million dollars [1]. The goal of validation is to ensure that a design meets the user's needs, which is just as important as producing a functional design. Inadequate validation leads to an undesired or unsuitable product and wastes significant resources, money, and time. As design projects grow in complexity and duration, the initial objectives are often forgotten. Validation closes the production loop and ensures that the functionality intended for the user is delivered. Traditionally, validation is performed at the end of the product design process, through direct user testing among other methods. A newer approach, User-Centered Design, integrates users early in the design process, which spreads validation over the whole project timeline.

Big Idea

Definition of design validation

According to the U.S. Food and Drug Administration (FDA), “Design validation means establishing by objective evidence that device specifications conform with user needs and intended use(s)” [2]. Simply put, validating a design means answering the following question: did you design the right product?

Difference with verification

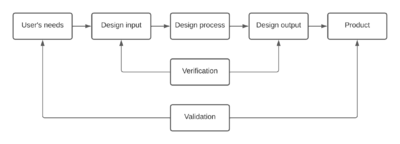

Design verification and validation, although often combined, are two different things. They are not necessarily applied in a specific order or within a predefined structure. Most of the time, they form a continuous process in which both are applied in parallel throughout the whole project.

Whereas validation asks whether the right product was designed, the main question designers try to answer when performing verification is: "Did you design the product right?". According to the FDA, "Verification means confirmation by examination and provision of objective evidence that specified requirements have been fulfilled" [2]. In other words, the purpose of verification is to ensure that the various components of the system work as expected. It compares the design inputs with the design outputs and checks that they correspond. This is usually done through testing, inspection, and analysis of a prototype, or, for a building for example, through calculations and simulations. Verification proves that the product that has been built is the product the designers wanted to build, while validation makes sure that this product is really useful to its users. The examples below help to understand this difference.

User-Centered Design

A newer validation approach, User-Centered Design, integrates users early in the design process, which spreads validation out over time. A notable example of this process is open-source collaboration, in which users can provide real-time feedback and suggestions.

Application

Methods

Validation methods can be divided into two main categories: qualitative and quantitative. They can of course be combined. Not all of them analyze the product in its entirety; some cover only certain aspects, such as ergonomics. Many of these methods are mainly used in the IT field, for applications or websites. However, they can be transferred to any field involving the creation of a product or a service, and therefore to many projects. Some of them are detailed below.

| Qualitative | Quantitative |
|---|---|
| Usability test | Data analysis |
| User feedback | Metrics monitoring |
| Heuristic evaluation | A/B testing |
| Pilot User Test | Quantitative survey |
| Stakeholder interview | Pilot test |

Qualitative methods

Heuristic evaluation

The purpose of this type of validation is to ensure that the product meets a set of pre-established requirements. It is a powerful way to check that the product is relevant enough before submitting it to user testing. The heuristics can be customized for the company and the type of product to be analyzed, or taken from established sets of evaluation heuristics commonly used in the field.

Heuristics are a good way to pre-validate a product without directly asking the users. They can therefore be preferred for a confidential project. The method is simple, inexpensive, and quick to set up. It can be applied at the end of the design process for final validation, as well as throughout the process to ensure that a project is on the right development path. Its effectiveness depends largely on the quality and completeness of the criteria selected.

A good example is Nielsen’s Heuristics, which are used to validate the user interfaces of computer software. It is a ten-point checklist that covers as many as possible of the requirements a piece of software needs to fulfill. If even one of these points is not satisfied, Jakob Nielsen argues that there will inevitably be flaws in the use of the product. Many of these criteria can be adapted to other types of products, and a similar heuristic can be created in any domain to ensure that a design meets the user's needs. It is also possible to assign weights to the different criteria according to their importance, to focus the analysis on the most critical points.

Nielsen’s ten heuristics are:

- Visibility of System Status

Users of the website, application, or other digital product must be able to understand what is going on through appropriate feedback (notifications, progress bars, etc.).

- Match between the system and the real world

Words, sentences, information, and graphic elements must feel natural and match experiences the user has already had.

- User control and freedom

Users can easily exit the system in case of error, and can undo or redo an action.

- Consistency and standards

Respect for standards, in terms of functionality, navigation, graphics, language used, etc. Similar elements should also look similar.

- Error prevention

The design is conceived so that the user does not make serious mistakes and can always correct their mistakes.

- Recognition rather than recall

Reduce the mental load with clear instructions, showing the path taken, to minimize the effort of memorization during use.

- Flexibility and efficiency of use

The interface is convenient for users regardless of their digital maturity. For example, keyboard shortcuts are not very useful for a novice, but for an expert who uses the product regularly they are a productivity gain.

- Aesthetic and minimalist design

Every element of the content and graphic design must have a reason to exist.

- Help users recognize, diagnose, and recover from errors

Error messages are clear, simple, and constructive.

- Help and documentation

If necessary, always provide easily accessible instructions to answer users' questions and needs.
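The weighting idea mentioned above can be sketched in a few lines of Python. This is only an illustration: the weights, the pass/fail results, and the function names are hypothetical, only a subset of the ten heuristics is shown, and nothing here is part of Nielsen's method itself.

```python
# Hypothetical evaluation of an imagined product.
# Each entry maps a heuristic to (weight, satisfied); weights are assumptions
# (1 = minor, 3 = critical) chosen by the evaluating team.
EVALUATION = {
    "Visibility of system status": (3, True),
    "Consistency and standards": (2, True),
    "Error prevention": (3, False),
    "Help and documentation": (1, False),
}


def weighted_score(results):
    """Return the share of total weight carried by satisfied heuristics (0.0 to 1.0)."""
    total = sum(weight for weight, _ in results.values())
    passed = sum(weight for weight, ok in results.values() if ok)
    return passed / total


def failed_by_priority(results):
    """Return the names of failed heuristics, most heavily weighted first."""
    failed = [(name, weight) for name, (weight, ok) in results.items() if not ok]
    return [name for name, _ in sorted(failed, key=lambda item: -item[1])]


if __name__ == "__main__":
    print(f"Weighted score: {weighted_score(EVALUATION):.2f}")
    print("Fix first:", failed_by_priority(EVALUATION))
```

Sorting the failed criteria by weight gives the design team a simple priority list, which is the practical point of weighting: the most critical violations are addressed first.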

Pilot User Test

The Pilot User Test makes it possible to test the product continuously, in real time, and in a typical environment of use. The goal is to determine whether the product can exist in the real world as it was designed, and to highlight defects in its use (time, ergonomics, etc.) or potential risks.

This test requires a group of test users. They must be experienced or trained beforehand: they must know how to share their opinion efficiently and exhaustively, how to test as many functionalities as possible, and where to look for possible problems or for ways of using the product that the designers did not take into account. These test users receive a scenario to follow that represents part of the typical use of the product. They then give their opinion on the overall experience and rate different aspects of the product. This feedback can also be collected continuously, both during and after use.

This test should be combined with measurement and analysis tools to get the most out of it. Verification of the product can be done in parallel with validation of its design, by looking for possible bugs and measuring the execution time and overall functioning of the product. At this stage of product development, the object under test is often a prototype rather than the final product. The test can therefore be repeated on each successive prototype.

Quantitative methods

Analytics and benchmarking

A good quantitative method to validate a product is to define measurable values that evaluate different aspects of its design. This data can be obtained through digital tools that gather quantified data on the use of the product: number of simultaneous testers, execution time, mean task completion time, etc. It is then easy to compare two versions of the product numerically by applying the same measures to both. This is a good way to estimate whether a design decision is sound. The disadvantage of this method is that it is retrospective: a new design can only be validated by comparing it with the previous one. The systematic collection of data therefore lengthens the process. Moreover, each version should contain as few changes as possible, so that a change in the measured values can be attributed to a specific design change.

One way to improve this method is to combine it with a benchmark of the sector to which the product belongs. A benchmark makes it possible to compare the product directly with industry standards, by comparing the collected measurements with those of existing products. This can be useful for companies with experience in a field, which can compare their new products with older ones that have performed particularly well. Although not encouraged, it is also a way to avoid the iterative process of analyzing each design change in turn, by analyzing only the final version of the product.
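As a minimal sketch of this kind of comparison, the Python snippet below compares the mean task completion time of two versions and checks the newer one against a benchmark. All figures, the benchmark value, and the names `relative_change` and `BENCHMARK_SECONDS` are assumptions made up for illustration; real data would come from the analytics tools mentioned above.

```python
from statistics import mean


def relative_change(baseline, candidate):
    """Relative change of the mean from baseline to candidate (negative = lower mean)."""
    base = mean(baseline)
    return (mean(candidate) - base) / base


# Hypothetical task completion times in seconds, one value per test user.
version_1 = [42.0, 38.0, 45.0, 40.0, 35.0]
version_2 = [33.0, 36.0, 30.0, 34.0, 32.0]

# Assumed reference value taken from comparable existing products.
BENCHMARK_SECONDS = 35.0

change = relative_change(version_1, version_2)
meets_benchmark = mean(version_2) <= BENCHMARK_SECONDS

print(f"Mean task time changed by {change:+.1%} between versions")
print(f"Version 2 meets the benchmark: {meets_benchmark}")
```

With so few samples this says nothing about statistical significance; it only shows how a single metric can tie a design change to a measurable effect and to an external reference.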

A/B testing

A/B testing consists of proposing several variants of the same object that differ in a single criterion, in order to determine which version gives the best results with consumers. The technique is particularly used in online communication, where it is now possible to test several versions of the same web page, mobile application, email, or advertising banner on a sample of people, in order to choose the most effective one and use it on a large scale.

For example, applying A/B testing to the design of a car would consist of varying the color of the car, its maximum speed, its shape, or perhaps an element of its packaging, one element at a time, and observing which version is the most convincing. The process of A/B testing is therefore:

- Defining your objective

- Identifying your target precisely

- Varying an element of your product

- Letting your audience discover these variations, interact with them, and react to your new proposals

- Collecting the results of your test

- Adapting your product design (but it could also be your communication, your sales strategy, etc.)
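The "collecting the results" step can be illustrated with a short Python sketch. It assumes that each variant is judged by a conversion rate (the share of users who perform the desired action) and compares the two rates with a two-proportion z-test; this is one common way to analyze an A/B test, not a method prescribed by the text above, and the counts are invented.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a, conv_b: number of converting users in variants A and B.
    n_a, n_b: number of users exposed to variants A and B.
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 1000 users per variant.
    z, p = two_proportion_z_test(conv_a=120, n_a=1000, conv_b=160, n_b=1000)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A small p-value suggests that the observed difference is unlikely to be due to chance alone, which supports adopting the better-performing variant. The threshold used to decide is a choice the team should fix before running the test.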

Examples of successful validation

Limitations

- Lack of awareness of its benefits, especially in emerging countries such as China, India, Brazil, and Argentina.

- External pressure, for example in pharmaceuticals, where large amounts of funds are invested.

- Increasing design complexity, a key challenge in many domains: the variables that define the right product are ever-changing, so outdated validation methods often fail to produce the right product.

References

- [1] Bahill, A. T., & Henderson, S. J. (2005). Requirements Development, Verification, and Validation Exhibited in Famous Failures. Systems Engineering, 8(1), 1–14. https://doi.org/10.1002/sys.20017

- [2] eCFR :: 21 CFR 820.3 -- Definitions. (n.d.). Retrieved February 18, 2022, from https://www.ecfr.gov/current/title-21/chapter-I/subchapter-H/part-820/subpart-A/section-820.3