Data Quality Management


Abstract

Data quality has a significant impact on both the efficiency and the effectiveness of projects[1]. As part of the digital transformation, data has become more readily available and more important than ever before. Project teams perform data analytics to leverage key resources, optimise processes, gain insight and make informed decisions. As such, data is becoming increasingly valuable to project managers, who base their decisions on insights drawn from high-quality data. However, if data quality is poor, project managers risk making misguided decisions based on unreliable data. Data quality management can be used as a tool to ensure that communication and decisions are driven by high-quality data.

Data quality management (DQM) serves the objective of continuously improving the quality of data relevant to a project or program[2]. The end goal of DQM is not simply to improve data quality for its own sake, but to achieve desired outcomes that rely on high-quality data[3]. DQM revolves around the management of people, processes, technology and data through coordinated activities aimed at improving data quality[4]. This article explores DQM as a tool that can be applied by project and program managers alike: it examines the meaning behind the term data quality and investigates the DQM process as described by the ISO 8000-61 framework.

Overview

DQM in PMBOK

One can argue that data quality can affect every knowledge area of project management. For example, a supply chain planner may expect a given good to be received based on vendor lead-time data; however, if that data is of poor quality, the planner may be misled in project time management through inaccurate schedule development and planning. Similarly, a project cost controller overseeing a construction project may provide inaccurate input for project cost management if the data for different cost parameters is inaccurate. Likewise, a risk consultant using master data for risk quantification may give managers an inaccurate risk assessment, which could harm the project through poor project risk management. Data is used everywhere, and as such each area of project management can be influenced by data quality. However, some areas of project management rely more heavily on high-quality data than others to function successfully. ISO 8000-2 defines data as a "reinterpretable representation of information in a formalised manner suitable for communication, interpretation, or processing"[5]; the key words in this definition are information and communication. Based on this definition, it is perhaps within project communications management in the PMBOK® that DQM is most relevant.

According to the PMBOK® guidelines, project communications management refers to the processes that are necessary for the timely planning, creation, collection, distribution, control, monitoring, and disposition of project information.

Data Quality

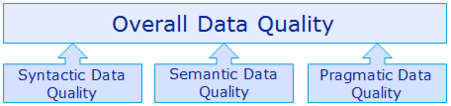

An important element of DQM is understanding the dimensions and complexity of the term data quality. ISO 8000-2 defines it as the "degree to which a set of inherent characteristics of data fulfils requirements"[6]. However, data quality is a multifaceted concept that spans various dimensions, so it can be difficult to define in one sentence, let alone measure[7]. Data quality dimensions discussed in the literature include accuracy, completeness, consistency, integrity, representation, timeliness, uniqueness and validity. The ISO 8000-8 guidelines divide data quality into three categories based on semiotic theory, which concerns the use of symbols such as letters and numbers to communicate information[8]. The three semiotic categories relevant to data quality are syntactic quality, semantic quality and pragmatic quality[9]. As illustrated in figure 2.A, these categories provide a basis for measuring data quality and are important terms to recognize for understanding DQM.

Syntactic Data Quality

The goal of syntactic data quality is consistency, meaning the use of consistent syntax and symbolic representation for particular data. Syntactic quality can be measured as the percentage of inconsistencies in data values. Consistency is often achieved through a set of syntax rules governing data input[10].
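As a minimal sketch of this measure, the Python function below computes the percentage of values that break a single syntax rule. The rule itself (dates written as YYYY-MM-DD), the function name `inconsistency_percentage` and the sample data are hypothetical and chosen only for illustration; a real DQM program would derive its syntax rules from its own data requirements.

```python
import re

# Hypothetical syntax rule for illustration: dates must be written as YYYY-MM-DD.
DATE_RULE = re.compile(r"\d{4}-\d{2}-\d{2}")

def inconsistency_percentage(values, rule=DATE_RULE):
    """Return the percentage of values that do not fully match the syntax rule."""
    values = list(values)
    if not values:
        return 0.0  # no data, so nothing is inconsistent
    violations = sum(1 for v in values if not rule.fullmatch(v))
    return 100.0 * violations / len(values)

delivery_dates = ["2024-03-01", "01/03/2024", "2024-03-15", "15 March 2024"]
print(inconsistency_percentage(delivery_dates))  # 50.0
```

Two of the four delivery dates use a different syntax, so half of the values are inconsistent with the rule.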

Semantic Data Quality

The goal of semantic data quality is accuracy and comprehensiveness. Accuracy can be defined as the degree to which a data element conforms to the real-world state it represents. Comprehensiveness can be understood as the extent to which relevant states of the real-world system are represented in a data warehouse[11]. Properties that fall under semantic quality are completeness, unambiguity, meaningfulness and correctness[12].
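The two semantic goals can be sketched as simple ratios, assuming a trusted reference dataset is available to stand in for the real-world truth (in practice this might be a verified vendor record or a physical audit). The vendor lead-time data and the function names `accuracy` and `comprehensiveness` below are hypothetical.

```python
def accuracy(recorded, reference):
    """Share (0..1) of recorded values that agree with the trusted reference."""
    shared = [key for key in recorded if key in reference]
    if not shared:
        return 0.0
    correct = sum(1 for key in shared if recorded[key] == reference[key])
    return correct / len(shared)

def comprehensiveness(recorded, reference):
    """Share (0..1) of reference entities that are represented in the recorded data."""
    if not reference:
        return 1.0  # nothing in the reference is missing
    return sum(1 for key in reference if key in recorded) / len(reference)

# Hypothetical vendor lead times in days: verified reference vs. project data.
reference_lead_times = {"V1": 14, "V2": 21, "V3": 7, "V4": 30}
recorded_lead_times = {"V1": 14, "V2": 28, "V3": 7}

print(round(accuracy(recorded_lead_times, reference_lead_times), 2))  # 0.67
print(comprehensiveness(recorded_lead_times, reference_lead_times))   # 0.75
```

Accuracy here only considers records present in both sources, while missing records are captured separately by comprehensiveness, so the two measures do not double-count the same problem.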

Pragmatic Data Quality

The goal of pragmatic data quality is usability and usefulness. Usability refers to how easily a stakeholder can access and interact with the data, while usefulness refers to the ability of the data to help a stakeholder accomplish tasks and make decisions. Data may be more useful or usable for some stakeholders than for others, depending on their ability to interpret the data and the context of their tasks. Pragmatic data quality involves the properties of timeliness, conciseness, accessibility, reputability and understandability[13].
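Because pragmatic quality depends on the stakeholder, its measures are usually parameterised by that stakeholder's requirements. As an illustrative sketch, timeliness could be measured as the share of records updated within a maximum age that a given stakeholder accepts. The `timeliness` function, the seven-day window and the timestamps are assumptions made for the example, not measures prescribed by ISO 8000-8.

```python
from datetime import datetime, timedelta

def timeliness(last_updated, as_of, max_age):
    """Share (0..1) of records whose last update is no older than max_age at time as_of."""
    if not last_updated:
        return 0.0
    fresh = sum(1 for ts in last_updated if as_of - ts <= max_age)
    return fresh / len(last_updated)

# Hypothetical last-update timestamps for four records.
as_of = datetime(2024, 3, 31)
updates = [
    datetime(2024, 3, 30),
    datetime(2024, 3, 1),
    datetime(2024, 3, 29),
    datetime(2023, 12, 31),
]

# A stakeholder who needs weekly data sees only half of the records as timely.
print(timeliness(updates, as_of, timedelta(days=7)))  # 0.5
```

A stakeholder with a looser requirement (for example a 90-day window) would rate the same data as more timely, which reflects the stakeholder-dependent nature of pragmatic quality.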

Figure 2.B highlights the categories addressed above and summarises each category's goal, properties and example methods for empirical measurement. Various empirical models build on semiotic theory for categorizing data quality, some of which use regression and weighting models to measure it empirically. These can be studied further in Moody et al.'s paper "Evaluating the quality of process models: empirical analysis of a quality framework".

Fundamental Principles of DQM

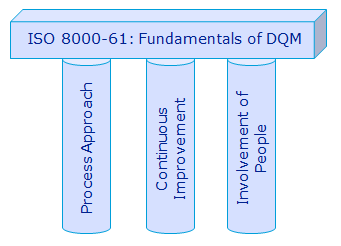

It is important to recognize that DQM often functions as one of many building blocks of a larger data governance project or program[14]. Figure 3.A highlights the tools and building blocks that make up a data governance project or program: DQM, data architecture, metadata management, master data management, data distribution, data security, and information lifecycle management. DQM itself does not cover these other building blocks, although there is often a strong interplay between the different functions. The ISO 8000-61 guidelines define three fundamental principles of DQM: the process approach, continuous improvement, and involvement of people. These principles act as pillars in building and managing a program for the assurance of high-quality data.

ISO 8000-61 Principles of DQM

Process Approach

The first fundamental principle is the process approach, which concerns defining and operating the processes that use, create and update relevant data[15]. It states that a successful DQM program requires a process approach to managing key process activities, that is, defining and operating recurring and reliable processes to support DQM.

Continuous Improvement

Continuous improvement forms the second fundamental principle. It establishes the idea that data must be constantly improved through effective measurement, remediation and corrective action on data nonconformities. As stated by the ISO 8000-61 guidelines, continuous improvement depends on "analysing, tracing and removing the root causes of poor data quality", which may require adjustments to faulty processes[16]. This principle is closely linked to the concept of Kaizen.
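One way to sketch the "tracing" step is to tag each nonconformity with the process that produced it and rank processes by frequency, so that corrective action targets the most common source first. This Pareto-style ranking is a swapped-in technique rather than one prescribed by ISO 8000-61, and the log entries and the `rank_root_causes` function are hypothetical.

```python
from collections import Counter

def rank_root_causes(nonconformities):
    """Count nonconformities per originating process, most frequent first."""
    return Counter(n["source"] for n in nonconformities).most_common()

# Hypothetical log of nonconforming purchase-order records and where they came from.
log = [
    {"record": "PO-101", "source": "manual entry"},
    {"record": "PO-102", "source": "vendor import"},
    {"record": "PO-103", "source": "manual entry"},
    {"record": "PO-104", "source": "manual entry"},
]

print(rank_root_causes(log))  # [('manual entry', 3), ('vendor import', 1)]
```

Counting alone only points to where problems cluster; removing the root cause still requires analysing why that process produces nonconforming data.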

Involvement of People

The third fundamental principle highlights the importance of people to DQM. It states that different responsibilities are allocated to individuals at different levels within a program or organisation. Top-level management provides necessary and sufficient resources and directs the program towards specific data quality goals. Data specialists perform activities such as implementing processes, intervention, control, and embedding future processes for continuous improvement. End users perform direct data processing activities such as data input and analysis. End users typically have the greatest direct influence on actual data quality, as they are the individuals in closest contact with the data itself[17].

ISO 8000-61 Framework for the DQM Process

The Basic Structure of the DQM Process

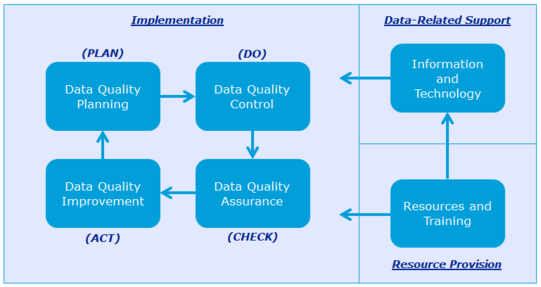

The basic structure of the DQM process is illustrated in figure 4.A. It consists of three overarching, interlinked processes: implementation, data-related support, and resource provision[18].

- Implementation Process: this stage aims to achieve continual improvement of data quality through a systematic, cyclic plan-do-check-act process. The cycle involves planning (plan), control (do), assurance (check), and improvement (act).

- Data-Related Support: this stage enables the implementation process by providing information and technology related to data management. As figure 4.A highlights, it provides an input to the implementation stage.

- Resource Provision: this stage involves training of individuals performing data related tasks and providing sufficient resources to effectively and efficiently manage the implementation and data-related support processes.

The Plan-Do-Check-Act (PDCA) cycle of the implementation stage promotes more effective and efficient data quality management through continuous improvement. The PDCA cycle can be briefly described as follows[19]:

- Plan: develop strategic plans of action for implementing data quality requirements and delivering results, with the aim of enhancing quality.

- Do: implement and conduct the plan for data quality control.

- Check: monitor, measure and compare results against data requirements. Conduct performance reporting.

- Act: take remedial action for continuous process improvement. This also concerns preventing or reducing the undesired effects identified in the check stage.
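The cycle can be sketched as a loop in Python. This is a simplified illustration under several assumptions: the Plan step is reduced to a fixed target share of valid records, Do applies a single validation rule, Check compares the measured share with the target, and Act remediates nonconforming records directly. The function `pdca_cycle` and its parameters are hypothetical; in practice the Act step would also adjust the processes that caused the nonconformities, as the continuous improvement principle requires.

```python
from typing import Callable, List, Tuple

def pdca_cycle(
    records: List[str],
    is_valid: Callable[[str], bool],
    remediate: Callable[[str], str],
    target: float,
    max_cycles: int = 5,
) -> Tuple[List[str], List[float]]:
    """Repeat Do-Check-Act until the share of valid records meets the planned target."""
    history: List[float] = []
    for _ in range(max_cycles):
        # Do: apply the data quality control rule to every record.
        valid_count = sum(1 for r in records if is_valid(r))
        # Check: measure the result and keep it for performance reporting.
        score = valid_count / len(records) if records else 1.0
        history.append(score)
        if score >= target:
            break  # requirement met; no remediation needed this cycle
        # Act: remediate nonconforming records before the next cycle.
        records = [r if is_valid(r) else remediate(r) for r in records]
    return records, history

# Plan (hypothetical requirement): every vendor code must be free of surrounding whitespace.
codes = ["V001", " V002", "V003 ", "V004"]
cleaned, scores = pdca_cycle(codes, lambda c: c == c.strip(), str.strip, target=1.0)
print(cleaned)  # ['V001', 'V002', 'V003', 'V004']
print(scores)   # [0.5, 1.0]
```

The `history` list plays the role of the performance reporting in the Check step: it shows quality rising from 50% to 100% across two cycles.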

The Detailed Structure of the DQM Process

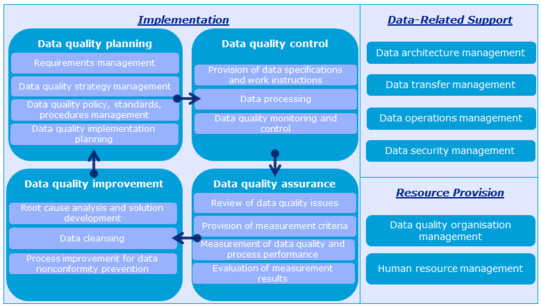

Figure 4.B reveals the lower levels of the DQM process, broken down by the three overarching processes of implementation, data-related support, and resource provision.

Implementation

Data-Related Support

Resource Provision

Benefits and Difficulties of DQM

Glossary

- DQM: Data Quality Management

- ISO: International Organization for Standardization

- PDCA: Plan-Do-Check-Act

- PMBOK: Project Management Body of Knowledge

Bibliography

Batini, C. and Scannapieco, M. (2006): Data Quality: Concepts, Methodologies and Techniques. Berlin: Springer. This book explores various concepts, methodologies and techniques involving data quality processes. It provides a solid introduction to the topic of data quality.

ISO 8000-2. (2017): Data Quality - Part 2: Vocabulary. International Organisation for Standardisation. Ref: ISO 8000-2:2017(E). This is the ISO standard for data quality vocabulary. It provides clear and authoritative definitions of data quality terms.

ISO 8000-61. (2016): Data Quality - Part 61: Data Quality Management: Process Reference Model. International Organisation for Standardisation. Ref: ISO 8000-61:2016(E). This is the ISO standard for data quality management processes. It provides an excellent and concise overview of the industry best practices regarding DQM processes, explaining the fundamental principles behind DQM and elaborating on process procedures through a framework guide.

ISO 8000-8. (2015): Data Quality - Part 8: Information and Data Quality: Concepts and Measuring. International Organisation for Standardisation. Ref: ISO 8000-8:2015(E). This is the ISO standard for data quality concepts and measurement theory. It introduces the semiotic theories for data quality.

Knowledgent (2014): Building a Successful DQM Program. Knowledgent White Paper Series. This paper provides an introduction to DQM within enterprise information management, explaining the basic concepts behind DQM and also explaining the data quality cycle framework.

PMI. (2013): A Guide to the Project Management Body of Knowledge (PMBOK® Guide), 5th ed. Pennsylvania USA, pg. ##-##.

Shanks, G. and Darke, P. (1998): Understanding Data Quality in a Data Warehouse: A Semiotic Approach. Massachusetts USA: University of Massachusetts Lowell, pg. 292-309. This paper provides an overview of data quality measures using a semiotic approach, explaining each semiotic level and how it relates to data quality. The semiotic theory discussed is similar to the one later adopted by the ISO 8000-8 standard for data quality.

References

- ↑ Pg. 2, 2006 ed. Data Quality: Concepts, Methodologies and Techniques, Carlo Batini & Monica Scannapieco

- ↑ Pg. 3, 2014 ed. Building a Successful Data Quality Management Program, Knowledgent

- ↑ Pg. 3, 2014 ed. Building a Successful Data Quality Management Program, Knowledgent

- ↑ 2017 ed. ISO 8000-2: Data Quality - Part 2: Vocabulary, ISO

- ↑ 2017 ed. ISO 8000-2: Data Quality - Part 2: Vocabulary, ISO

- ↑ 2017 ed. ISO 8000-2: Data Quality - Part 2: Vocabulary, ISO

- ↑ Pg. 6, 2006 ed. Data Quality: Concepts, Methodologies and Techniques, Carlo Batini & Monica Scannapieco

- ↑ Pg. 298, 1998. Understanding Data Quality in a Data Warehouse: A Semiotic Approach, Shanks, G and Darke, P.

- ↑ 2015 ed. ISO 8000-8: Data Quality - Part 8: Information and data quality: Concepts and measuring, ISO

- ↑ Pg. 303, 1998. Understanding Data Quality in a Data Warehouse: A Semiotic Approach, Shanks, G and Darke, P.

- ↑ Pg. 301, 1998. Understanding Data Quality in a Data Warehouse: A Semiotic Approach, Shanks, G and Darke, P.

- ↑ Pg. 303, 1998. Understanding Data Quality in a Data Warehouse: A Semiotic Approach, Shanks, G and Darke, P.

- ↑ Pg. 302, 1998. Understanding Data Quality in a Data Warehouse: A Semiotic Approach, Shanks, G and Darke, P.

- ↑ Pg. 3, 2014 ed. Building a Successful Data Quality Management Program, Knowledgent

- ↑ Pg. 2, 2016 ed. ISO 8000-61: Data quality management: Process reference model, ISO

- ↑ Pg. 2, 2016 ed. ISO 8000-61: Data quality management: Process reference model, ISO

- ↑ Pg. 2, 2016 ed. ISO 8000-61: Data quality management: Process reference model, ISO

- ↑ Pg. 2, 2016 ed. ISO 8000-61: Data quality management: Process reference model, ISO

- ↑ Pg. 3, 2016 ed. ISO 8000-61: Data quality management: Process reference model, ISO