Biases in Project Management and How to Overcome Them with the Two Systems of Thinking

Written by Bjarki Rúnar Sverrisson


Abstract

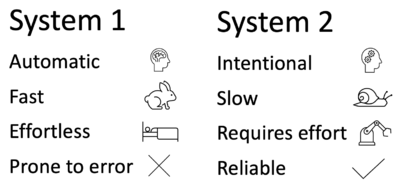

The Israeli-American psychologist and 2002 Nobel Prize winner Daniel Kahneman introduced the two systems of thinking in his book "Thinking, Fast and Slow". He argues that every decision a human being makes is made by one of two systems of thought, which he calls System 1 and System 2. Each system has its own abilities, limitations, and functions. System 1 thinking is automatic, intuitive, and effortless, while System 2 thinking is intentional, analytical, slow and effortful.

In project management, the decision-making process can be influenced by many factors, including the biases of the people involved in the project. System 1 thinking can, for example, result in the optimism bias, where people tend to be overly optimistic about the outcomes of planned actions, and the uniqueness bias, where people tend to see themselves or their project as more remarkable or special than it actually is. System 2 thinking can help people overcome these biases by analyzing data more thoroughly and basing decisions on a comprehensive understanding of the project's goals, risks, and opportunities.

Project managers should actively seek out varied perspectives and data sources, and engage in deliberate thought to reduce the biases that System 1 thinking introduces. They should also create decision-making processes that encourage critical thinking and the exploration of alternative options. By being aware of the two systems of thinking and the biases associated with them, project managers can make better judgments, mitigate the biases of System 1 thinking and deliver projects more successfully.

This article will focus on how the two systems of thinking and biases relate to decision-making in project management, and how project managers can overcome biases with System 2 thinking.

The Two Systems of Thinking

Daniel Kahneman, an Israeli-American psychologist, economist and 2002 Nobel Prize winner, introduced the two systems of thinking in his book "Thinking, Fast and Slow". He refers to them as System 1 and System 2. They are two different modes of thinking, and Kahneman argues that every decision a human being makes is made by one of them. Each system has its own abilities, limitations, and functions.

System 1 thinking is automatic, intuitive, fast and effortless. It is often associated with patterns and emotions. It is responsible for unconscious decisions and can lead to errors and biases because it relies on shortcuts and heuristics rather than careful analysis. However, it is essential for survival, as it can process a lot of information quickly and without effort, which makes it useful for the fast, effortless decisions of everyday life. For example, if we see a snake, System 1 thinking makes us react immediately. The operations of System 1 are often beyond our control because they run without conscious awareness, which makes it hard to detect when System 1 is causing biases. It is therefore very important to be aware of the biases associated with System 1 thinking. Examples of activities associated with System 1 thinking are [1]:

- Detect that an object is further away than another object.

- Detect hostility in a voice.

- Give the answer to 2 + 2.

System 1 thinking is very influential in many everyday scenarios. In marketing, for example, companies rely on the automatic, feeling-driven thoughts of System 1 to sell their products. Advertisements do not just provide information about a product; they also try to establish emotional associations that stick in customers' heads and make them buy the product without a second thought. This works especially well for cheap products, as customers tend to engage System 2 thinking for expensive ones. The automatic nature of System 1 has also been identified as a cause of insufficient retirement savings. For example, behavioural economists in the United States observed that when workers receive a pay raise, they do not increase their saving rate, which has been attributed to an excessive reliance on System 1 thinking.

In 1995, the popularity of M&M's candy was declining. The company's creative director came up with the idea of introducing a character for each M&M colour, each with its own personality, e.g., Red as the sarcastic one, Yellow as the happy one and Blue as the cool one. The characters became so popular that when they were removed from advertisements, many customers asked for them back, and they were eventually brought back. By developing these memorable characters, the company succeeded in establishing M&M's in customers' System 1 thinking [2].

System 2 thinking is intentional, analytical, slow and effortful. Its operations are often associated with choice and concentration. It is more accurate, more reliable and less prone to biases than System 1 thinking, but it is not used all the time because it requires much more effort and time. According to Kahneman, most people are lazy thinkers who fall back on System 1 thinking whenever possible. Examples of activities associated with System 2 thinking are:

- Compare the price of two products.

- Focus on the voice of some specific person in a crowded and loud room.

- Calculate 155 × 139.

The main differences between System 1 and System 2 are shown in Figure 1.

People identify themselves with System 2, as it decides what to think about and what to do. However, we rely far more on System 1 in our daily lives, as it saves us time and effort [1]. One estimate puts the share of our thinking done by System 1 at 98%, leaving only 2% to System 2 [3]. When accuracy and reliability are crucial, it is essential to use System 2 thinking to overcome the biases of System 1. It is therefore important for project managers to understand the two systems of thinking, as this helps them recognize the biases that can arise when they rely too heavily on System 1. Being aware of this allows them to reduce biases, which results in better decision-making [1].

Biases in Project Management

Project management is the application of knowledge, skills, tools and techniques to project activities in order to meet the project requirements, deliver successful outcomes and create value for the organization and its stakeholders. A project is a temporary endeavour with a unique goal. It can stand alone or be part of a program or portfolio. The person assigned by the performing organization to lead the project team, and who is responsible for achieving the project goals, is called the project manager. Project managers are responsible for facilitating the project team and managing processes to deliver intended outcomes [4].

Biases can significantly affect decision-making in project management and lead to errors and mistakes. They can cause project managers to overlook important information and make decisions that are not in the best interest of the project. By being aware of biases, project managers can be better informed and make better decisions [1]. The number of biases identified by behavioural scientists is enormous; the list of cognitive biases on Wikipedia contains more than 200 items [5]. It is therefore not realistic to expect project managers to be aware of every bias. However, by knowing the most common biases in project management and recognizing when System 1 may be influencing their decision-making, project managers can minimise the effects of these biases and base their decisions on logical information rather than on predetermined beliefs and limited perspectives. This leads to better decision-making and more successful project outcomes [1]. This section discusses the five most important behavioural biases in project management, as identified by Flyvbjerg [5].

Strategic misrepresentation

Strategic misrepresentation is also referred to as political bias, strategic bias, power bias or the Machiavelli factor. It is the tendency to deliberately and systematically distort or misrepresent information for strategic reasons. The bias is common when the ultimate goal is considered more important than the methods used to achieve it. It can be traced to agency problems and political-organizational pressures, such as competition for limited resources or the pursuit of a more prestigious position within the organization. Megaprojects are especially likely to be affected by strategic misrepresentation, because the bigger and more expensive a project is, the more attention and involvement it draws from top management, which creates more opportunities for political-organizational pressure.

A senior consultant at a Big Four firm explained how strategic misrepresentation works in practice. When building up his transport economics and policy group, he conducted feasibility studies as a subcontractor to engineers, and it was clear to him that the engineers simply wanted to justify the project. He asked one of the engineers why they consistently underestimated project costs. The engineer replied that if they gave the true expected cost, nothing would be built. In this example, the bias serves a specific strategic objective: securing funding and justifying the project [5].

Optimism bias

Optimism bias is a cognitive bias: the tendency to be overly optimistic about the outcomes of planned actions. The bias is non-deliberate, i.e., people are affected by it unconsciously. It causes people to make decisions based on an idealized vision of the future rather than on a rational assessment of gains, losses and probabilities. It leads to overestimating positive events and underestimating negative ones, for example overestimating benefits and underestimating costs, so that projects do not go as planned. While optimism can be beneficial, it can also be harmful if people take risks that they would have avoided had they known the real odds. Successful leaders have a rare combination of hyper-realism and can-do optimism: they plan for the worst-case scenario and remain optimistic [5].

Uniqueness bias

Uniqueness bias is the tendency of people to see themselves as more remarkable and special than they actually are, e.g., healthier, smarter or more attractive. In project management, it is the tendency of planners and managers to see their projects as more remarkable than they are. This bias tends to prevent project managers from learning from other projects, as they believe their own project is unique and that they therefore have little to learn from others. This harms project success: project managers who see their projects as unique perform significantly worse than other project managers.

A project may be unique in its specific geography and time. For example, a high-speed rail line has never been built in California, so a project to build one there could be considered unique in that sense. But the project is unique only in California. Many high-speed lines have been built elsewhere, and the people handling the Californian project could learn from those projects [5].

Planning fallacy

The planning fallacy is a subtype of optimism bias. It is the tendency to make estimates that are unrealistically close to the best-case scenario, for example underestimating cost, risk and task completion time, or overestimating benefits and opportunities. Kahneman identified this bias as arising from a tendency to neglect distributional information while planning. People who are made to feel powerful are more likely to underestimate task completion times than those who are not, meaning that the planning fallacy affects people in power more than others. It is crucial for project managers to avoid the planning fallacy and convex risks, as they can have negative effects on the project's success [5].
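
One way to counter the neglect of distributional information described above is to look at how comparable past projects actually turned out, rather than only at one's own best-case plan. The sketch below illustrates this under stated assumptions: `past_overruns` is a hypothetical list of actual-to-estimated duration ratios for ten comparable projects, `best_case_weeks` is a hypothetical planner's estimate, and the nearest-rank percentile is just one simple way to read the distribution.

```python
import math


def percentile(values, p):
    """Return the nearest-rank p-th percentile (0 < p <= 100) of values."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical data: actual duration divided by estimated duration for ten
# comparable past projects (1.0 means the project finished exactly on time).
past_overruns = [0.95, 1.0, 1.05, 1.1, 1.2, 1.25, 1.3, 1.5, 1.8, 2.4]

# Hypothetical single-point estimate based only on the planner's own view.
best_case_weeks = 20

# Scale the estimate by the distribution of past outcomes instead of
# trusting the best case.
p50_weeks = best_case_weeks * percentile(past_overruns, 50)
p80_weeks = best_case_weeks * percentile(past_overruns, 80)

print(f"Best-case plan: {best_case_weeks} weeks")
print(f"Median outcome (P50): {p50_weeks:.1f} weeks")
print(f"80th percentile (P80): {p80_weeks:.1f} weeks")
```

With these made-up numbers, the 20-week plan becomes 24.0 weeks at the median and 30.0 weeks at the 80th percentile, because eight of the ten hypothetical past projects overran their estimates.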

Overconfidence bias

Overconfidence bias is the tendency of people to have excessive confidence in their own answers to questions while disregarding the uncertainty of the world and their ignorance of it. It leads people to overestimate how much they know and underestimate what they do not know [5]. The main problem with overconfidence is that it leads to incorrect decisions. A good strategy in a complex environment is to learn from mistakes using feedback, but overconfident people rarely get feedback on their judgements [1].
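
Since overconfidence persists when feedback is missing, one simple way to create feedback is to record estimates as ranges with a stated confidence level and later check how often the actual outcome landed inside them. The sketch below assumes a hypothetical log of task-duration estimates, each given as an 80% interval; if the observed hit rate is well below 80%, the intervals were too narrow, which is the pattern overconfidence would predict.

```python
# Hypothetical log of past estimates: each tuple is (low, high, actual),
# where (low, high) was stated as an 80% confidence interval for a task's
# duration in days and `actual` is the duration observed afterwards.
STATED_CONFIDENCE = 0.80
past_estimates = [
    (10, 14, 13),
    (5, 8, 11),
    (20, 25, 31),
    (3, 5, 4),
    (12, 15, 19),
]


def hit_rate(records):
    """Fraction of records whose actual value fell inside [low, high]."""
    hits = sum(1 for low, high, actual in records if low <= actual <= high)
    return hits / len(records)


observed = hit_rate(past_estimates)
print(f"Stated confidence: {STATED_CONFIDENCE:.0%}, observed hit rate: {observed:.0%}")
if observed < STATED_CONFIDENCE:
    print("Intervals were too narrow: a sign of overconfidence.")
```

Five records are far too few to draw firm conclusions; a check like this only becomes informative as the log of estimates grows.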

Overcoming biases with System 2 thinking

Project managers can avoid the biases associated with System 1 thinking by engaging System 2 thinking. This involves taking a more careful and rational approach to decision-making, in which all possibilities and outcomes are considered, information is analysed carefully and team members are consulted. As a result, project managers make better and more informed decisions. System 2 thinking can help project managers evaluate project risks more successfully by prompting them to consider all possible scenarios rather than relying on their instincts alone. Additionally, System 2 thinking allows project managers to explain their thinking and the reasons for their decisions to team members, making the work environment more positive and collaborative. Because System 2 requires effort, project managers need to actively focus, regularly slowing down and carefully considering the information they have, while trying to recognize when the automatic thinking of System 1 could be leading them to errors. By consciously overriding their initial instincts with rational, thoughtful analysis, they can engage System 2 and make better-informed decisions.

Techniques described by Kahneman to overcome biases by engaging System 2 thinking are the following [1]:

- Deliberate thinking. This means consciously making the effort to engage in a slower, more effortful thinking process. It involves taking time to slow down and consider the information at hand carefully while resisting the temptation to rely on the automatic instincts of System 1.

- Asking questions. By asking themselves questions, project managers can take a more critical and analytical approach to the decision and the information they are considering. Examples of such questions are:

  - What information do I have to support this decision?

  - Are there any alternative explanations for that information?

  - What are the potential risks and benefits of this decision?

  - What would people who disagree with this decision say about it?

- Challenge assumptions. Actively question assumptions and potential biases, and examine alternative options.

- Consider multiple perspectives. Considering multiple perspectives and using information and data from different sources helps project managers gain a more complete understanding of the project or problem at hand.

- Use decision tools. Decision tools such as decision trees and checklists can help project managers engage System 2 thinking by forcing them to consider all the necessary data and information.

- Seek feedback from others. Asking team members or others for feedback gives project managers different opinions that can challenge their initial beliefs and assumptions. This can help them recognize biases that may be at play.

- Analyse data thoroughly. Analysing data and information thoroughly makes it more likely that a decision is based on evidence rather than on intuitions and heuristics that may carry biases.

- Analyse the statistics. Carefully analysing statistics such as probabilities can help project managers make more informed decisions that are based on the numbers rather than on assumptions and heuristics.

- Analyse past experiences. Analysing past experiences that include similar decisions can help project managers identify biases that may have influenced their decision making processes in the past.
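
The techniques above mention decision trees and careful analysis of probabilities. As a minimal sketch of how a simple one-level decision tree might be evaluated, the code below computes the probability-weighted (expected) value of each option. The options, probabilities and payoffs are entirely hypothetical, and expected value is only one of several ways to compare branches; it ignores, for example, how much downside risk a project can tolerate.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    probability: float  # chance that this branch occurs, between 0 and 1
    value: float        # net payoff in hypothetical currency units (losses negative)


def expected_value(outcomes):
    """Probability-weighted value of one option's branches."""
    total_p = sum(o.probability for o in outcomes)
    # Requiring the branches to sum to 1 forces every outcome to be listed.
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"branch probabilities sum to {total_p}, not 1")
    return sum(o.probability * o.value for o in outcomes)


# Hypothetical decision: the in-house option has the largest possible payoff,
# which an intuitive judgement may latch onto, but also a large chance of loss.
options = {
    "build in-house": [Outcome(0.5, 500_000), Outcome(0.5, -300_000)],
    "buy off-the-shelf": [Outcome(0.9, 200_000), Outcome(0.1, -50_000)],
}

for name, outcomes in options.items():
    print(f"{name}: expected value {expected_value(outcomes):,.0f}")

best = max(options, key=lambda name: expected_value(options[name]))
print(f"Highest expected value: {best}")
```

Here the option with the largest possible payoff has the lower expected value (100,000 versus 175,000), which is the kind of result that writing the branches out explicitly can surface.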

Limitations of the Two Systems of Thinking

The two systems of thinking have their limitations. Both System 1 and System 2 can be affected by emotions, so an angry person is more likely to make a decision based on anger even when it is not the logical choice. Even when System 2 thinking is engaged, biases can still influence decision-making, because some biases are so deeply ingrained and automatic that they are very difficult to recognize and overcome. System 2 also has limited attention and capacity, so engaging it requires a lot of effort and is mentally hard to sustain over a long period. Finally, it is impossible to analyse all possible outcomes and their likelihoods with certainty, since information and data are never perfect; even when System 2 thinking is applied effectively to minimise biases, a decision can still go wrong [1].

As discussed earlier, thorough analysis of information is one of the main techniques for engaging System 2 thinking. A downside is that repeated exposure to the same information can trigger the illusory truth effect: people begin to believe something because they have been repeatedly exposed to it, sometimes even when it is not true. In an experiment conducted in 1977, participants were given true and false statements and asked to rate their truthfulness. Statements that had been repeated were rated as more truthful than statements that had not [6].

Both System 1 and System 2 are important for effective decision-making, so the key is to understand how to engage System 2 and when it is appropriate to do so [1].

Annotated bibliography

Daniel Kahneman. (2011). Thinking, Fast and Slow. Penguin Books.

The primary reference in this article is the book "Thinking, Fast and Slow" by the Nobel Prize-winning psychologist and economist Daniel Kahneman. Drawing on decades of work in psychology and behavioural economics, Kahneman explains the two systems of thinking and how they influence people's decision-making processes. The book also goes into detail on cognitive biases and the limitations of decision-making, and provides many examples of how the two systems influence our judgements and thoughts in different scenarios. It is a must-read for anyone interested in improving their decision-making.

Bent Flyvbjerg. (2021). Top-Ten Behavioral Biases in Project Management: An Overview. Project Management Journal, vol. 52, no. 6, 531–546. doi:10.1177/87569728211049046

"Top-Ten Behavioral Biases in Project Management: An Overview" is a key reference in this wiki article. It identifies the ten most important behavioral biases for project management, discusses each bias in detail and explains its impact on project management with examples. It also discusses why base rate neglect is one of the primary reasons that projects underperform. This wiki article discusses the five most important of these biases; readers interested in more detail on them, or in the remaining five, are encouraged to read the paper.

Project Management: A guide to the Project Management Body of Knowledge (PMBOK guide), 7th Edition. (2021). Project Management Institute, Inc.

A Guide to the Project Management Body of Knowledge (PMBOK Guide), 7th Edition, published by the Project Management Institute, is essential reading for anyone interested in project management. It provides a good overview of project management processes, tools and techniques that help projects succeed, and covers industry changes and good practices that serve as valuable guidance for project managers, teams and stakeholders.

References

- [1] Daniel Kahneman. (2011). Thinking, Fast and Slow. Penguin Books.

- [2] System 1 and System 2 Thinking. (n.d.). The Decision Lab. https://thedecisionlab.com/reference-guide/philosophy/system-1-and-system-2-thinking

- [3] Astrid Groenewegen. (n.d.). Kahneman Fast And Slow Thinking Explained. SUE Behavioural Design. https://suebehaviouraldesign.com/kahneman-fast-slow-thinking/

- [4] Project Management: A guide to the Project Management Body of Knowledge (PMBOK guide), 7th Edition. (2021). Project Management Institute, Inc. https://findit.dtu.dk/en/catalog/61f92f6644ccbf17cdd9f5c1

- [5] Bent Flyvbjerg. (2021). Top-Ten Behavioral Biases in Project Management: An Overview. Project Management Journal, vol. 52, no. 6, 531–546. doi:10.1177/87569728211049046

- [6] Jessica Udry, Sara K. White, Sarah J. Barber. (2022). The effects of repetition spacing on the illusory truth effect. Cognition. doi:10.1016/j.cognition.2022.105157