Mindfulness and Cognitive Biases in Project Management

Before introducing the concepts of mindfulness and cognitive biases in relation to project management, a short introduction to complexity and to the thinking behind ‘engineering systems’ is necessary to explain their relevance and usefulness.


Complexity

Even among scientists, there is no single definition of complexity (Johnson, 2009). Instead, real-world systems that scientists consider complex have been used to define complexity in a variety of ways, depending on the scientific field. Here, a system-oriented perspective on complexity is adopted in accordance with the definition in Oehmen et al. (2015), which lists the properties of complexity as:

- Containing multiple parts;

- Possessing a number of connections between the parts;

- Exhibiting dynamic interactions between the parts; and,

- Producing, through those interactions, behaviour that cannot be explained as the simple sum of the parts (emergent behaviour).

In other words, a system contains a number of parts that can be connected in different ways. Both the parts and the connections between them can vary in type. The number and types of parts, and the number and types of connections between them, determine the complexity of the system. Furthermore, from a dynamic perspective, both the parts and their connections change over time. The social intricacy of human behaviour is one of the main reasons for this change. We do not always behave rationally and predictably, as will be examined further below, which increases the complexity of every system in which humans are involved.

Engineering systems

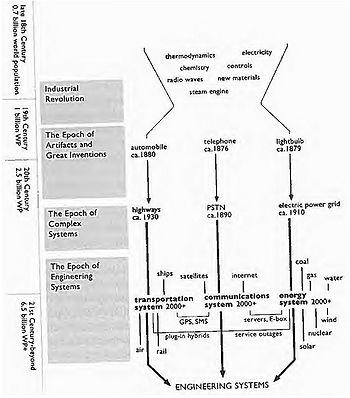

The view, articulated by de Weck, Roos, and Magee (2011), that our modern lives are governed by engineering systems is adopted here to explain why the complexity of the world only moves in one direction: upwards. In short, they distinguish between three levels of systems: artifact, complex, and engineering. At the artifact level we have inventions such as the car, the phone, and the light bulb. On their own, they do not offer any real benefits to our lives. To realize their potential and exploit their respective benefits, the right infrastructure has to be present. Following the above example, the needed infrastructure consists of roads, the public switched telephone network (PSTN), and the electrical power grid. This level of interconnectedness is defined as the complex system level. Looking at how the previously separate transportation, communication, and energy systems have developed, one sees that everything is becoming increasingly interconnected. This is the engineering systems level. See Figure 1 for a visual representation of the above example.

One of the characteristics of our modern society, and of the engineering systems governing it, is that technology and humans can no longer be separated. Engineering systems are by definition socio-technical systems, which, as described in the section above, only adds to their complexity. “Without the right tools to analyse and understand them, complex systems become complicated: They confuse us, and we cannot control what happens or understand why” (Oehmen et al., 2015, p. 5). Mindfulness is one of those tools, and it can be adopted in project management to help keep complex projects from becoming complicated.

Mindfulness

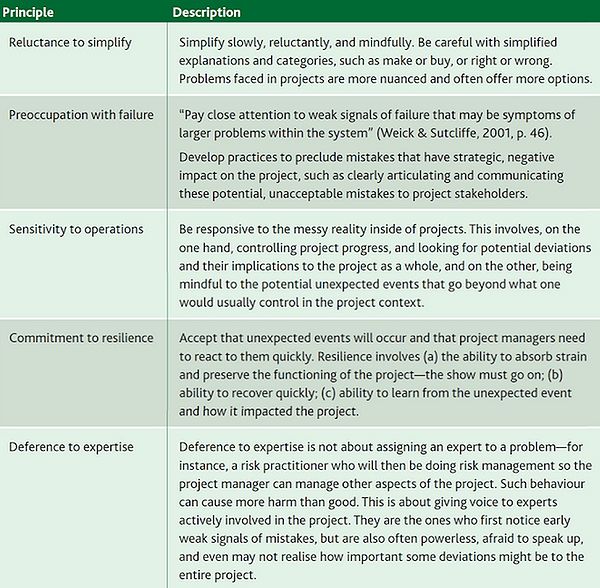

Mindfulness is defined as “the quality or state of being conscious or aware of something” (http://www.oxforddictionaries.com). The following definition elaborates further: “a rich awareness of discriminatory detail. By that we mean that when people act, they are aware of context, of ways in which details differ (in other words, they discriminate among details), and of deviations from their expectations” (Weick & Sutcliffe, 2001, p. 32). To translate this into a useful model, Weick & Sutcliffe (2001) developed five principles of mindfulness that can be used to increase the reliability of organizations; in other words, to improve both an organization’s chances of preventing potentially disastrous unexpected events and its ability to recover rapidly if such events do happen. These principles are therefore well suited to dealing with the intrinsic uncertainty of human behaviour and the complexity it brings to organizations. Oehmen et al. (2015) have adapted the five principles to the field of project management, as shown in Table 1.

The principle of sensitivity to operations is chosen for further elaboration. The reason lies in the last part of its description: “being mindful to the potential unexpected events that go beyond what one would usually control in the project context”. In this context, the “thing” that goes beyond what one would usually control is chosen to be our own mind. It is well known that cognitive biases exist, and that the deviousness of the mind can constitute a risk to any project. But how can we discipline our minds to think more sharply and clearly? How can we make sure that our rational decisions are in fact rational? And are some people more predisposed than others to getting caught by cognitive biases? Answering these questions will be the focus of the remainder of the article.

To the reviewer

When I write a report or article I always … This is as far as I have gotten with the finished text. The rest of the article is still in …

The Cognitive Reflection Test (CRT)

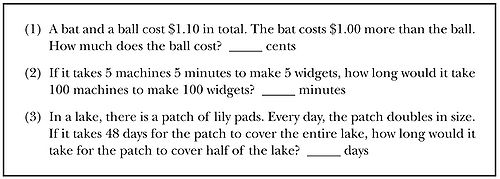

Before reading on, answer the three questions … etc.

The two ways of thinking

- Introduce systems 1 and 2 the same way the book does: with a picture for system 1 and a math problem for system 2.

- short definitions: page 20 bottom to page 21 top

- elaborate with examples of what systems 1 and 2 do

- list of examples: page 21 for system 1 and page 22 for system 2

- Point out that system 1 relies on innate skills we share with other animals: we are born to perceive the world around us - page 21 bottom

- describe the interaction between system 1 and 2

- good description on pages 24-25 in “Plot Synopsis”

- system 1 is involuntary and automatic, requiring little or no effort.

- system 2 requires effort and normally runs in a comfortable low-effort mode

- etc.

- division of labour

- “The often-used phrase “pay attention” is apt: you dispose of a limited budget of attention that you can allocate to activities, and if you try to go beyond your budget, you will fail.” - page 23 middle

- follow with the gorilla example - page 23 bottom to 24.

- This illustrates two important facts about our minds: we can be blind to the obvious, and we are also blind to our blindness.

- good description on pages 24-25 in “Plot Synopsis”

System 1

- it has little understanding of logic and statistics

- it cannot be turned off

- gullible and biased to believe and confirm

- generates surprisingly complex patterns of ideas

- generates impressions, feelings, and inclinations; when endorsed by system 2, these become beliefs, attitudes, and intentions

- operates automatically and quickly, with little or no effort, and no sense of voluntary control

- can be programmed by system 2 to mobilize attention when a particular pattern is detected (like the illusion with the arrows)

- creates coherent patterns of activated ideas in associative memory

- distinguishes the surprising from the normal

- infers and invents causes and intentions (like seeing patterns that are not necessarily there)

- neglects ambiguity and suppresses doubt (like the ABC or A13C)

- etc.

- the rest of the list is on page 105, but only include the items the article discusses, or mark with a * the ones the reader can read about in the book themselves.

System 2

- in charge of doubting and unbelieving

- only system 2 can construct thoughts in an orderly series of steps.

- system 2 can take over and overrule the freewheeling impulses of system 1

- exerts self-control

- has a limited amount of attention to allocate

- is generally lazy - follows the rule of least effort

- etc.

Conflict and Illusions

- Show the figure with RIGHT left upper LOWER

- explain the result of the conflict between the two systems - pages 25-26

- Show the famous Müller-Lyer illusion - page 27

- Show the 3D illusion - page 100

- Explain the conclusion - page 28

- Even when cues to likely errors are available, errors can be prevented only by the enhanced monitoring and effortful activity of system 2. …etc... The best we can do is a compromise: learn to recognize situations in which mistakes are likely, and try harder to avoid significant mistakes when the stakes are high.

CRT - Conclusion

- Shane Frederick constructed a Cognitive Reflection Test consisting of the bat-and-ball problem and two similar questions, all of which have intuitive answers that are both compelling and wrong. He found that people who score very low on the test (in whom the supervisory function of system 2 is weak) are prone to answer questions with the first idea that comes to mind and are unwilling to invest the effort needed to check their intuitions. These people are also more prone to accept and follow other suggestions from system 1. - page 48

- read on to get examples/tests that confirm this

- conclusion: system 1 is impulsive and intuitive; system 2 is capable of reasoning, and it is cautious, but at least for some people it is also lazy. We recognize related differences among individuals: some people are more like their system 2; others are closer to their system 1. This simple test has emerged as one of the better predictors of lazy thinking.

- Keith Stanovich and Richard West originally introduced the terms system 1 and system 2. … They wanted to answer the question: What makes some people more susceptible than others to biases of judgement? … etc... They found that the bat-and-ball question and similar questions are somewhat better indicators of our susceptibility to cognitive errors than conventional measures of intelligence such as IQ. - page 48 bottom to 49

- what is contained in the “etc.” is the concept of “rationality”; I don’t know yet whether this should be included in the article

- Refer to the test ‘the reader’ took earlier, and urge those who got “tricked” by their system 1 to pay extra attention in real-life situations where they feel the urge to let system 1 answer important questions or make high-risk decisions.
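For reference, the bat-and-ball problem is commonly stated as: “A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?” The intuitive answer is 10 cents. The short worked check below, writing b for the price of the ball in dollars (a symbol introduced here only for illustration), shows the small effort system 2 has to invest to catch the error:

```latex
\begin{aligned}
% Total price: ball (b) plus bat (b + 1.00)
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05 \\[4pt]
% Checking the intuitive answer b = 0.10: the bat would cost 1.10
0.10 + (0.10 + 1.00) &= 1.20 \neq 1.10
\end{aligned}
```

The ball costs 5 cents and the bat $1.05. Rejecting the intuitive answer takes a single addition, which is exactly the kind of check a lazy system 2 tends to skip.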

Common and Powerful Cognitive Biases

- Start by explaining associative thinking

- find the basic material on pages 50-52

- end with:

- … only a few of the activated ideas will register in consciousness; most of the work of associative thinking is silent, hidden from our conscious selves. The notion that we have limited access to the workings of our minds is difficult to accept because, naturally, it is alien to our experience, but it is true (find the proof): you know far less about yourself than you feel you do. - page 52 middle

- Priming effect - Finish the word SO_P: EAT primes SOUP, WASH primes SOAP, etc. - page 52 bottom

- ideomotor effect (priming phenomenon): the influencing of an action by an idea - pages 53-54

- e.g. participants asked to think about words that could be associated with old age walked more slowly right afterwards (most did not notice the connection to old age)

- e.g. participants asked to walk extremely slowly for 5 minutes were afterwards much quicker to recognize words related to the theme “old age”

This is known as a reciprocal priming effect: if you were primed to think of old age, you would tend to act old, and acting old would reinforce the thought of old age.

- another example: being amused tends to make you smile, and smiling tends to make you feel amused

- yet another, see the example at the bottom of page 54: shaking the head primed rejection of a message and nodding primed acceptance, without the participants being aware of it

- in general: you have no awareness that this is happening! This is because priming phenomena arise in system 1, and you have no conscious access to them - page 57

- a good formulation of why disbelief in priming effects is not an option, and an argument for why you have to accept that this happens to you too - page 57, middle paragraph

- Read chapter 6; it should be about seeing causes and intentions.

- Maybe Nassim Taleb’s narrative fallacy fits here.

Jumping to conclusions

- Very good description of when jumping to conclusions (system 1) is good, and, when it is bad, how system 2 can be used to prevent it. - page 79

- Show the figure on page 79.

- When faced with ambiguity: When uncertain, system 1 bets on an answer, and the bets are guided by experience. The rules of betting are intelligent: recent events and the current context have the most weight in determining an interpretation. When no recent event comes to mind, more distant memories govern. - page 80

- An important point is that you are not even aware that this choice between “bets” is made; you are aware only of the interpretation. System 1 does not keep track of alternatives that it rejects, or even of the fact that there were alternatives.

- This gives a natural transition to WYSIATI.

What you see is all there is (WYSIATI)

- Focuses on existing evidence and ignores absent evidence.

- System 1 excels at constructing the best possible story that incorporates ideas currently activated, but it does not (cannot) allow for information it does not have. The measure of success for system 1 is the coherence of the story it manages to create. The amount and quality of the data on which the story is based are largely irrelevant. When information is scarce, which is a common occurrence, system 1 operates as a machine for jumping to conclusions. - page 85

- WYSIATI can help explain many biases, e.g. overconfidence and framing effects. - pages 87-88

- I (Martin) guess that the halo effect also reflects WYSIATI: system 1 jumps to conclusions and tells the story from scarce information (first impressions).

- Nassim Taleb introduced the notion of a narrative fallacy to describe how flawed stories of the past shape our views of the world and our expectations for the future. - page 199

- … At work here is that powerful WYSIATI rule. You cannot help dealing with the limited information you have as if it were all there is to know. You build the best possible story from the information available to you, and if it is a good story, you believe it. Paradoxically, it is easier to construct a coherent story when you know little, when there are fewer pieces to fit into the puzzle. - page 201 middle

- Use this to transition to the halo effect.

Exaggerated emotional coherence (The Halo Effect)

- pages 82-84

- The tendency to like (or dislike) everything about a person, including things you have not observed. The halo effect increases the weight of first impressions, sometimes to the point that subsequent information is mostly wasted. This is a common bias that plays a large role in shaping our views of people and situations. It is one of the ways in which the representation of the world that system 1 generates is simpler and more coherent than the real thing.

- Solomon Asch experiment with Allan and Ben - page 82

- The halo effect is also an example of suppressed ambiguity.

The mental shotgun

- Briefly explain the mental shotgun and refer ‘the reader’ back to the RIGHT left upper LOWER figure, which shows the mental shotgun in effect.

- “We often compute much more than we want or need. I call this excess computation the mental shotgun. It is impossible to aim at a single point with a shotgun because it shoots pellets that scatter, and it seems almost equally difficult for system 1 not to do more than system 2 charges it to do.” - page 95

Substituting questions

- When faced with a difficult question, the mental shotgun makes it easy to generate a quick answer. The process is roughly: when facing the difficult question, the mental shotgun (in system 1) generates many associations and ideas, and an easier question related to the original is likely to be invoked and then answered. This heuristic provides an off-the-shelf answer to the difficult question. System 2 can then be activated to evaluate whether the answer to the easy question also answers the difficult one. … System 2 often follows the path of least effort and endorses the intuitive answer. You may not even be aware that you didn’t answer the original question. - pages 98-99, examples on page 98